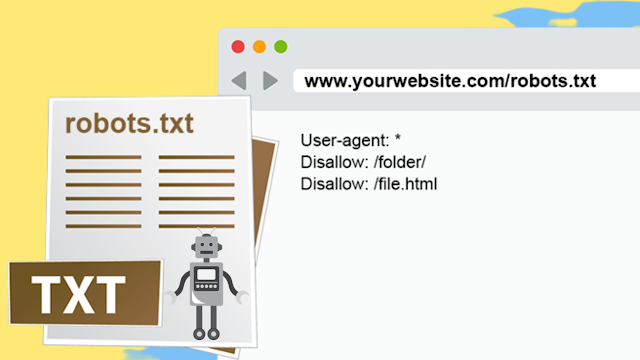

A robots.txt file is a plain text file that tells search engine crawlers which pages or sections of a website they should not crawl. The file must be placed in the root directory of the website, so it can be reached by appending "/robots.txt" to the domain name (for example, https://example.com/robots.txt).
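As a small illustration of where the file lives, the sketch below derives the robots.txt address from any page URL on the same site. The helper name `robots_url` and the example.com addresses are made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://example.com/blog/post?id=1"))
```

Whatever page the function is given, the result always points at the root of the same host, which is the only location crawlers check.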

Custom robots.txt files allow website owners to specify which pages or sections of their website they do not want search engines to crawl. This can be useful for several reasons, such as hiding pages that are under construction or keeping crawlers away from pages that contain sensitive information.

The format of a robots.txt file is relatively simple. Rules are organized into groups: each group starts with a "User-agent" line (i.e. the name of a search engine crawler) followed by one or more "Disallow" lines, each giving a URL path that the crawler should not request. For example, the following robots.txt file would block all compliant search engine crawlers from crawling the "secret" directory of a website:

User-agent: *
Disallow: /secret/

Note that the "*" in the user-agent line means that this rule applies to all search engine crawlers. A website owner who wants to block only certain search engines can instead name the user-agent of each crawler to block, such as Googlebot or Bingbot.
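One way to check how such rules are interpreted is Python's standard-library `urllib.robotparser`. The following is a minimal sketch, not a description of how any particular search engine works: the crawler names `ExampleBot` and `OtherBot` and the example.com URLs are hypothetical. The directives of each group are written on consecutive lines, because this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: every crawler is kept out of /secret/,
# and a crawler calling itself ExampleBot is kept out of the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /secret/

User-agent: ExampleBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The "*" group applies to any crawler without a group of its own.
print(parser.can_fetch("OtherBot", "https://example.com/secret/plan.html"))
print(parser.can_fetch("OtherBot", "https://example.com/public.html"))

# ExampleBot has its own group, so only that group's rules apply to it.
print(parser.can_fetch("ExampleBot", "https://example.com/public.html"))
```

Here `can_fetch` reports whether a compliant crawler with the given name would be permitted to request the URL; it does not stop anything by itself.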

Website owners may also want to block search engines from crawling several specific pages or sections at once, for example account pages or user profiles that contain personal information:

User-agent: *
Disallow: /my-account/
Disallow: /user-profile/
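To see which URLs a set of rules like this covers, a list of paths can be run through the same standard-library parser. This is only a sketch: the function name `blocked_paths`, the crawler name `AnyBot`, and the sample paths are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /my-account/
Disallow: /user-profile/
"""


def blocked_paths(robots_txt, paths, user_agent="AnyBot"):
    """Return the paths that the given rules tell user_agent not to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


candidates = ["/", "/my-account/settings", "/user-profile/42", "/blog/hello"]
print(blocked_paths(ROBOTS_TXT, candidates))
```

Each Disallow value is matched as a prefix of the URL path, so everything underneath "/my-account/" and "/user-profile/" is covered while the rest of the site stays crawlable.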

It is important to note that while robots.txt files can be used to block search engine crawlers from crawling certain pages or sections of a website, they are not a foolproof method of keeping sensitive information out of search results. Search engines may still index the URL of a blocked page if other sites link to it, even though they do not crawl its content. Additionally, malicious actors may ignore the robots.txt file and still crawl blocked pages, and because the file itself is publicly readable, it reveals the paths it lists.

In conclusion, custom robots.txt files are a useful tool for website owners who want to control which pages or sections of their websites are crawled by search engines. However, they are not a foolproof method of keeping sensitive information out of search results, and should be used in conjunction with other security measures.
